[originally posted at https://www.lesswrong.com/posts/ALsuxpdqeTXwgEJeZ/could-a-superintelligence-deduce-general-relativity-from-a]

Introduction:

In the article/short story “That Alien Message”, Yudkowsky writes the following passage, as part of a general point about how powerful super-intelligences could be:

Riemann invented his geometries before Einstein had a use for them; the physics of our universe is not that complicated in an absolute sense. A Bayesian superintelligence, hooked up to a webcam, would invent General Relativity as a hypothesis—perhaps not the dominant hypothesis, compared to Newtonian mechanics, but still a hypothesis under direct consideration—by the time it had seen the third frame of a falling apple. It might guess it from the first frame, if it saw the statics of a bent blade of grass.

As a computational physicist, this passage really stuck out to me. I think I can prove that this passage is wrong, or at least misleading. In this post I will cover a wide range of arguments as to why I don't think it holds up.

Before continuing, I want to state my interpretation of the passage, which is what I’ll be arguing against.

1. Upon seeing three frames of a falling apple and with no other information, a superintelligence would assign a high probability to Newtonian mechanics, including Newtonian gravity. So if it was ranking potential laws of physics by likelihood, “Objects are attracted to each other by their masses in the form F = GmM/r²” would be near the top of the list.

2. Upon seeing only one frame of a falling apple and one frame of a single blade of grass, a superintelligence would assign a decently high likelihood to the theory of general relativity as put forward by Einstein.

This is not the only interpretation of the passage. It could just be saying that an AI would “invent” general relativity in the sense of writing down the equations while idly playing around, like mathematicians playing with 57-dimensional geometries or whatever. However, the phrases “under direct consideration” and “dominant hypothesis” imply that these aren’t just inventions, these are deductions. A monkey on a typewriter could write down the equations for Newtonian gravity, but that doesn’t mean it deduced them. What matters is whether the knowledge gained from the frames could be used to achieve things.

I’m not interested in playing semantics or trying to read minds here. My interpretation above seems to be what the commenters took the passage to mean. I’m mainly using the passage as a starting off point for discussion about the limits of first principles computations.

Here is a frame of an apple, and a frame of a field of grass.

I encourage you to ponder these images in detail. Try and think for yourself the most plausible method for a superintelligence to deduce general relativity from one apple image and one grass image.

I’m going to assume that the AI can somehow figure out the encoding of the images and actually “see” images like the ones above. This is by no means guaranteed, see the comments of this post for a debate on the matter.

What can be deduced:

It’s true that the laws of physics are not that complicated to write down. Doing the math properly would not be an issue for anything we would consider a “superintelligence”.

It’s also true that going from 0 images of the world to 1 image imparts a gigantic amount of information. Before seeing the image, the number of potential worlds it could be in is infinite and unconstrained, apart from cogito ergo sum. After seeing 1 image, all potential worlds that are incompatible with the image can be ruled out, and many more worlds can be deemed unlikely. This still leaves behind an infinite number of potential plausible worlds, but there are significantly more constraints on those worlds.

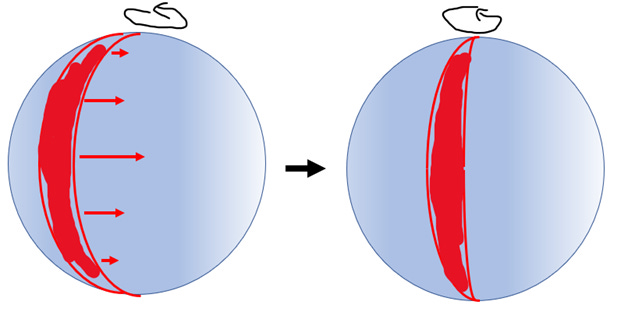

With 2 images, the constraints on worlds increase further, again chucking out large swathes of world-space. I could see how an AI might deduce that “objects” exist, and that they exist in three dimensions, from 2 images where the apple has slightly rotated. Below I have sketched a plausible path for this:

It can note that there are correlated patterns between the images that appear to be the same thing, corresponding to variations in the areas that are “red”. Of course, it doesn’t know what “red” is, it’s just looking at the “RGB” values of the pixels. It can see that the points towards the center of the object have moved more than those at the edges, in a steadily varying pattern. It can note that some patterns on one edge have “disappeared”, while on the other side, new patterns have emerged. It can also note that none of these effects have occurred outside the “red thing”. This all is enough to guess that the red thing is a moving object in 3-dimensional space, projected onto a 2d image.

Having 3 frames of the world would also produce a massive update, in that you could start looking at the evolution of the world. It could deduce that objects can move over time, and that movement can be non-linear in time, paving the way for acceleration theories.

If you just think about these initial massive updates, you might get the impression that a super-AI would quickly know pretty much anything. But you should always beware extrapolating from small amounts of early data. In fact, this discovery process is subject to diminishing returns, and they diminish fast. And at every point, there are still an infinite number of possible worlds to sift through.

With the next couple of frames, the AI could get a higher and higher probability that the apple is falling at a constant acceleration. But once that’s been deduced, each extra frame starts giving less and less new information. What does frame 21 tell you that you haven’t already deduced from the first 20? Frame 2001 from the first 2000?
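One way to put rough numbers on this is a toy model, sketched below under loud assumptions that are not in the passage: the apple starts at rest from a known position, frames arrive at equal intervals, each position measurement carries the same Gaussian noise, and the only unknown is the acceleration. Under those assumptions the frame interval and noise level cancel out, and the information each new frame adds about the acceleration depends only on the frame number. The function names are hypothetical.

```python
import math

def fisher_sum(n_frames):
    """Sum of k^4 for frames 1..n_frames.

    For the model y_k = 0.5 * a * (k * dt)^2 + noise, the Fisher information
    about the acceleration a is proportional to this sum; the constant factors
    (dt and the noise level) cancel in the ratio used below.
    """
    return sum(k**4 for k in range(1, n_frames + 1))

def bits_gained_by_frame(n):
    """Bits of information about a gained by adding frame n to frames 1..n-1.

    The uncertainty on a shrinks like 1/sqrt(fisher_sum), so the entropy
    reduction of the Gaussian estimate is 0.5 * log2 of the ratio of the sums.
    """
    if n < 2:
        raise ValueError("need at least one earlier frame to compare against")
    return 0.5 * math.log2(fisher_sum(n) / fisher_sum(n - 1))

for frame in (2, 21, 2001):
    print(f"frame {frame}: {bits_gained_by_frame(frame):.4f} bits")
```

In this toy model the second frame is worth roughly 2 bits about the acceleration, frame 21 about 0.17 bits, and frame 2001 under 0.002 bits. A real video would not satisfy these assumptions, but the shape of the curve is the point.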

Talking about the upper limits of Solomonoff induction obscures how correlated information is in practice. Taking a picture with a 400 megapixel camera will not give you much more information than a Gameboy camera if the picture is taken in a pitch black room with the lens cap on. More raw information does not help if it’s not useful.

Are falling apples evidence against Newtonian gravity?

Newtonian gravity states that objects are attracted to each other in proportion to their mass. A webcam video of two apples falling will show two objects, of slightly differing masses, accelerating at the exact same rate in the same direction, and not towards each other. When you don’t know about the earth or the mechanics of the solar system, this observation points against Newtonian gravity.

It is impossible to detect the gravitational force that apples exert on each other with a webcam. Take two apples that are 0.1 kg each, half a meter apart. Under Newtonian physics, the gravitational force between them would be approximately 2.7e-12 Newtons, which in complete isolation would give each apple an acceleration of about 2.7e-11 m/s². Note that 2.7e-11 meters is about half the radius of a hydrogen atom. If you left them 0.5 meters apart in a complete vacuum with only gravitational force acting, it would take a little over a day for them to collide. If you only watched them for 10 seconds, each would have travelled only about a nanometer: roughly ten times the diameter of a hydrogen atom.
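The arithmetic for the two apples can be checked with a short script. This is a minimal sketch that treats the apples as point masses in a vacuum; the function name and the value used for G are assumptions of the sketch, not something the webcam-bound AI would have access to.

```python
import math

G = 6.674e-11  # Newtonian gravitational constant, m^3 kg^-1 s^-2

def two_body_gravity(m1, m2, separation, watch_seconds):
    """Hypothetical helper: two point masses, no forces other than gravity."""
    force = G * m1 * m2 / separation**2   # mutual attraction, in newtons
    accel = force / m1                    # acceleration of the first mass, m/s^2
    # Distance the first mass covers from rest during a short watch,
    # treating the acceleration as constant over that time.
    travelled = 0.5 * accel * watch_seconds**2
    # Exact free-fall time for two point masses released at rest.
    t_collide = (math.pi / (2 * math.sqrt(2))) * math.sqrt(
        separation**3 / (G * (m1 + m2))
    )
    return force, accel, travelled, t_collide

force, accel, travelled, t_collide = two_body_gravity(0.1, 0.1, 0.5, 10.0)
print(f"force between apples: {force:.2e} N")
print(f"acceleration:         {accel:.2e} m/s^2")
print(f"moved in 10 s:        {travelled:.2e} m")
print(f"time to collide:      {t_collide / 86400:.2f} days")
```

Whatever the exact inputs, the displacement over a 10 second clip comes out at the nanometer scale or below, far beneath anything a webcam pixel can resolve.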

In reality, there are far more forces acting on the apples. The force of a gentle breeze is roughly 5 N/m², or 0.02 N on an apple. Minuscule fluctuations in wind speed will have a greater effect on the apples than their gravitational force towards each other.
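To see how lopsided the comparison is, the breeze and the apple-to-apple attraction can be put side by side. This is a rough sketch: the 0.004 m² cross-section is an assumed value chosen to reproduce the 0.02 N figure, and the function name is hypothetical.

```python
G = 6.674e-11  # Newtonian gravitational constant, m^3 kg^-1 s^-2

def wind_to_gravity_ratio(breeze_pressure, apple_area, mass, separation):
    """Hypothetical helper: ratio of breeze force on one apple to the
    gravitational pull between two identical apples."""
    wind_force = breeze_pressure * apple_area        # N
    gravity_force = G * mass * mass / separation**2  # N
    return wind_force / gravity_force

# 5 N/m^2 breeze, assumed 0.004 m^2 cross-section, 0.1 kg apples 0.5 m apart
ratio = wind_to_gravity_ratio(5.0, 0.004, 0.1, 0.5)
print(f"wind force is about {ratio:.1e} times the gravitational pull")
print(f"a wind fluctuation of one part in {ratio:.1e} already matches gravity")
```

Under these assumptions the breeze outweighs the mutual gravity by more than nine orders of magnitude, so a change in wind force of about one part in ten billion is already as large as the signal being sought.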

You might try to consider a way, with a gazillion videos of apples falling, to generate enough statistical power to detect the entirely minuscule bias in motion induced by gravity over the noise of random wind. Except that wind direction is far from perfectly random. It’s entirely plausible that putting two apples together will alter the flow of air so as to ever so slightly “pull” them together. This makes distinguishing the “pull” of gravity from the “pull” of air literally impossible, especially when you consider that the AI does not know what “wind” is.

The point of this is that in order to make scientific deductions, you need to be able to isolate variables. You can measure the gravitational force between objects without looking at astronomical data, as Cavendish did centuries ago to calculate G using two balls. But that experiment required equipment built specifically for that purpose, including a massive 160 kg ball, a specialized torsion balance apparatus, and a specialized cage around the entire thing to ensure absolutely zero wind or outside vibration occurred. The final result of which was a rod rotating 50 millionths of an angular degree. This type of experiment cannot be conducted by accident.

Alternate theories:

Ockham's razor will tell you that the most likely explanation for not detecting a force between two objects is that no such force exists.

Sure, the webcam has “detected gravity”, in that it sees objects accelerate downwards at the same rate. But it has not detected that this acceleration is caused by masses attracting each other. This acceleration could in fact be caused by anything.

For example, the acceleration of the apples could be caused by the camera being on a spaceship that is accelerating at a constant rate. Or perhaps the ground simply exerts a constant force on all objects. Perhaps there is an unseen property like charge that determines the attraction of objects to the ground. Or perhaps Newton's other laws don’t apply either, and objects just naturally accelerate unless stopped by external forces.

The AI could figure out that the explanation “masses attract each other” is possible, of course. But it requires postulating the existence of an unseen object offscreen that is 25 orders of magnitude more massive than anything it can see, with a center of mass that is roughly 6 or 7 orders of magnitude farther away than anything it can see in its field of view. Ockham's razor is not kind to such a theory, compared to the other theories I proposed above, which require zero implausibly large offscreen masses.

Even among theories where masses do attract each other, there are a lot of alternate theories to consider. Why should gravitational force be proportional to mM/r², and not mM/r³ or mM/log(r), or mM? Or maybe there's a different, unseen property in the equation, like charge? In order to check this, you need to see how the gravitational force differs at different distances, and the webcam does not have the precision to do this.
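A quick calculation shows how little the candidate laws differ over the span a webcam can see. The sketch below uses outside knowledge the AI would not have, namely an assumed Earth radius of 6.371e6 m and an assumed 1 m drop, and compares power-law forces F ∝ 1/r^p for a few exponents p; the function name is hypothetical.

```python
EARTH_RADIUS = 6.371e6  # meters; assumed value, not available to the AI

def fractional_force_change(exponent, r_start, drop):
    """Relative increase in a force F ∝ 1/r**exponent when the distance to
    the attracting center shrinks from r_start to r_start - drop."""
    return (r_start / (r_start - drop)) ** exponent - 1.0

# p = 0 is a distance-independent force, p = 2 is Newton, p = 3 an alternative
for p in (0, 2, 3):
    change = fractional_force_change(p, EARTH_RADIUS, 1.0)
    print(f"F ~ 1/r^{p}: force changes by {change:.2e} over a 1 m fall")
```

Under these assumptions every exponent gives a change in force of less than one part in a million over the fall, so telling the laws apart would require measuring the apple's acceleration to better than that, from pixel positions.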

Newtonian vs general relativity:

I have kept my discussion to Newtonian physics so far for simplicity. I think it goes without saying that if you can't derive Newtonian gravity, deriving general relativity is a pipe dream.

The decisive evidence for general relativity was that the precession of Mercury's orbit was off from the Newtonian prediction by about 43 arcseconds (about a hundredth of an angular degree) every century. This was detected using precise telescopic observations over a period of centuries.

The other test was an observation that light that grazes the sun will be deflected by 1.75 arcseconds (about half a thousandth of a degree). This was confirmed by using specialized photography equipment specifically during a solar eclipse.
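For a sense of scale, the deflection angle can be compared with what one webcam pixel covers. The camera parameters below (a 60 degree horizontal field of view spread evenly over 1920 pixels) are purely illustrative assumptions, as is the function name.

```python
ARCSEC_PER_DEGREE = 3600.0

def pixels_spanned(angle_arcsec, fov_degrees, pixels_across):
    """Hypothetical helper: how many pixels an angle covers, assuming the
    field of view is spread evenly across the sensor width."""
    arcsec_per_pixel = fov_degrees * ARCSEC_PER_DEGREE / pixels_across
    return angle_arcsec / arcsec_per_pixel

# 1.75 arcsecond deflection, assumed 60 degree field of view, 1920 pixels wide
deflection_px = pixels_spanned(1.75, 60.0, 1920)
print(f"light deflection spans about {deflection_px:.4f} of a pixel")
```

With these assumed numbers the deflection covers under a fiftieth of a pixel, and that is before considering that the webcam would need to be pointed at stars near the sun during an eclipse.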

Unless there happened to be astronomical data in the background of the falling apple, none of this is within reach of the super-intelligence.

All of the reasoning above about why you can’t detect the force between two apples applies way more to detecting the difference between Newtonian and general relativity versions of gravity. There is just absolutely no reason to consider general relativity at all when simpler versions of physics explain absolutely all observations you have ever encountered (which in this case is 2 frames).

Blades of grass and unknown variables:

Looking at the statics of several blades of grass, there are some vague things you could figure out. You could, say, make a simple model of the grass as a sheet of uniform density and width, with a “sticking force” between grass, and notice that a Monte Carlo simulation of different initial conditions gives rise to similar shapes as the grass, when you assume the presence of a downwards force.

However, the AI will quickly run into problems. It doesn’t know the source of the sticking force, it doesn’t know the density of the grass, and it doesn’t know the mass of the grass. For similar reasons to the apples above, the negligible attractive force between blades is impossible to detect, because it is drowned out by the unknown variables governing the grass. The “sticking force” that keeps grass upright is governed by internal biology such as “vacuoles”, the internal structure of which is also undetectable by webcams.

More exotic suggestions run into this unknown variable problem as well. In the comments people suggested that detecting chromatic aberration would help somehow. Well, it’s possible the AI could notice that the red channel, the blue channel, and the green channel are acting differently, and model it as differing bending of “light”. But then what? Even if you could somehow “undo” the RGB filter of the camera to deduce the spectrum of light, so what? The blackbody spectrum is a function of temperature, and you don’t know what temperature is. It is just as ill equipped to deduce quantum physics as it is to deduce relativity.

The laws of physics were painstakingly deduced by isolating one variable at a time, and combining all of human knowledge via specialized experiments over hundreds of years. An AI could definitely do it way, way faster than this with the same information. But it can’t do it without the actual experiments.

Simulation theories:

Remember those pictures from earlier? Well I confess, I pulled a little trick on the reader here. I know for a fact that it is impossible to derive general relativity from those two pictures, because neither of them is real. The apple is from this CGI video, the grass is from this Blender project. In neither case is Newtonian gravity or general relativity included in the relevant code.

The world the AI is in is quite likely to be a mere simulation, potentially a highly advanced one. Any laws of physics that are possible in a simulation are possible physics that the AI has to consider. If you can imagine programming it into a physics engine, it’s a viable option.

And if you are in a simulation, Ockham's razor becomes significantly stronger. If your simulation is on the scale of a falling apple, programming general relativity into it is a ridiculous waste of time and energy. The simulators will only input the simplest laws of physics necessary for their purposes.

This means that you can’t rely on arguments like “It might turn out apples can’t exist without general relativity somehow”. I’m skeptical of this of course, but it doesn’t matter. Simulations of apples definitely can exist with any properties you want. Powerful AIs can exist in any sufficiently complex simulation. Theoretically you could make one in Minecraft.

Conclusion:

For a computational physicist with a PhD, it may seem like overkill to write a 3000 word analysis of one paragraph from a 15 year old sci-fi short story on a pop-science blog. I did it anyway, mainly because it was fun, but also because it helps sketch out some of the limitations of pure intelligence.

Intelligence is not magic. To make deductions, you need observations, and those observations need to be fit for purpose. Variables and unknowns need to be isolated or removed, and the thing you are trying to observe needs to be on the right scale for your measuring equipment. Specialized equipment often has to be custom built for what is being tested. Each of these is a fundamental limitation that no amount of intelligence can overcome.

Of course, there is no way a real AGI would be anywhere near as constrained as the hypothetical one in this post, and I doubt hearing about the limitations of a 2 frame AI is that reassuring to anyone. But the principles I covered here still apply. There will be deductions that even the greatest intelligence cannot make, things it cannot do, without the right observations and equipment.

In a future post, I will explore in greater detail the contemporary limits of deduction, and their implications for AI safety.

Great article, I think it's even worse than you presented here.

> Take two apples that are 0.1 kg each [...] The AI could figure out that the explanation “masses attract each other” is possible, of course.

Even assuming it conceptualizes mass, how could it deduce the amount from a couple frames? The apple could be like a helium balloon, heck it could be like anti-matter which until recently some scientists thought might not fall down.

> Simulation theories: [...] Any laws of physics or that are possible in a simulation are possible physics that the AI has to consider.

You know what's worse for this deduction than simulation theories? Art theories. I might just be showing the AI an animation I made which could be following no physics at all.